Build your own MCP servers using n8n (no code)

You’re wasting hours building custom AI integrations that could be done visually in under 10 minutes.

Every new service means new code. Every API update breaks something. And you’re stuck maintaining integration spaghetti instead of building actual features.

Model Context Protocol (MCP) was supposed to fix this: it’s like USB-C for AI. But here’s what nobody’s saying: you don’t need to write a single line of Python to use it. n8n turns MCP development from a multi-day coding marathon into a drag-and-drop afternoon.

Why n8n works so well for MCP development

I’ll be honest: if you’re hand-coding MCP servers, you’re either unaware of better options or you enjoy pain.

Visual tool management

MCP server development requires defining tools, handling authentication, and managing server lifecycle, all through code. n8n flips this on its head by providing a visual interface where each workflow step becomes a potential MCP tool. You’re essentially building with building blocks instead of writing boilerplate.

Bidirectional MCP integration

What makes n8n unique in the MCP ecosystem is its bidirectional approach. You can turn n8n workflows into MCP servers that AI agents can call, and simultaneously use n8n as an MCP client to consume external tools. This dual capability means you can create sophisticated automation pipelines that bridge multiple MCP services without custom integration code.

Enterprise-ready infrastructure

n8n handles the operational concerns that often trip up custom MCP implementations: authentication, error handling, logging, and scalability. When you deploy an n8n MCP server, you’re getting production-ready infrastructure that can handle real workloads, not just proof-of-concept demos.

Building a developer assistant in 20 minutes

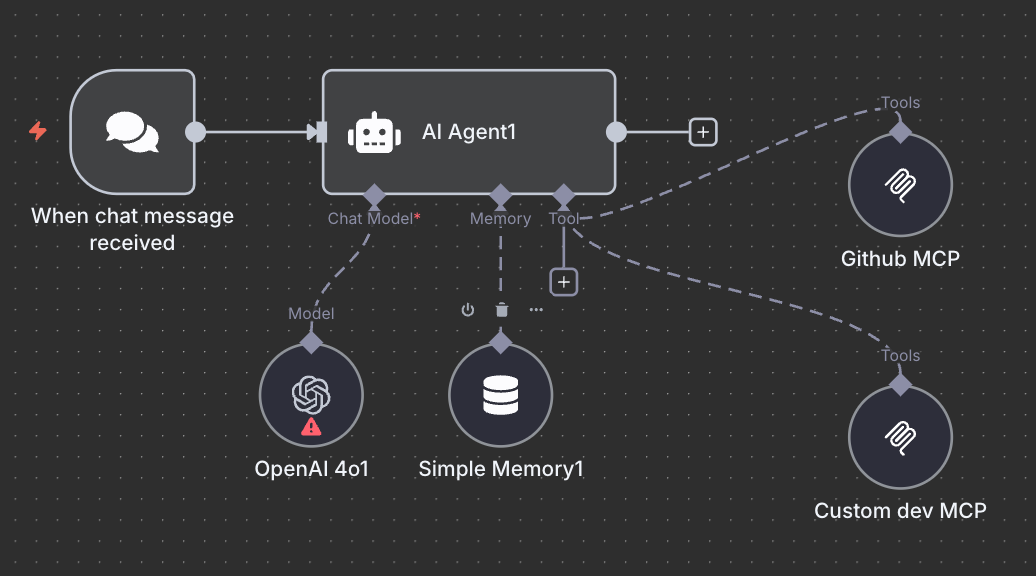

Let me show you something practical. We’re building a developer assistant that combines GitHub with custom code analysis tools. Two MCP servers. Zero Python files.

Architecture overview

Our developer assistant uses 2 MCP servers:

GitHub MCP server (external service integration)

get/comment on issues

list repository issues

read file contents

full GitHub API access

Custom dev tools MCP server (internal tools)

code quality analyzer

documentation generator

StackOverflow search

PR change summarizer

The AI agent discovers and orchestrates these tools automatically. No manual coordination required.

Prerequisites setup

You’ll need:

n8n instance (self-hosted or n8n Cloud)

community packages enabled:

N8N_COMMUNITY_PACKAGES_ALLOW_TOOL_USAGE=true

MCP community nodes installed:

n8n-nodes-mcp

GitHub personal access token

OpenAI API key

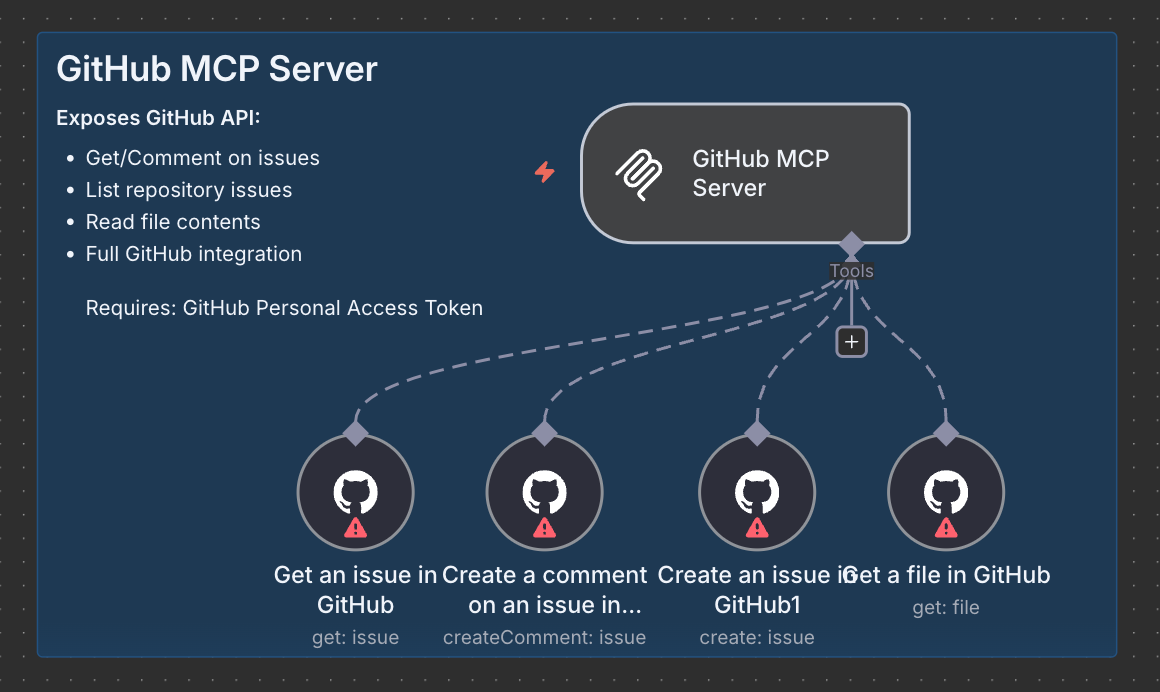

Implementing the GitHub MCP server

Step 1: create the MCP server trigger

Start by creating a new workflow and adding the MCP server trigger node. This transforms your workflow into an MCP server endpoint.

Node: MCP Server Trigger

Path: github-tools

The trigger generates a unique URL like /mcp/github-tools/sse that MCP clients can connect to.

Step 2: add GitHub tool nodes

Connect GitHub nodes to your MCP trigger. Each becomes a discoverable tool:

Get issue node:

Resource: Issue

Operation: Get

Parameters: $fromAI('issue_number', 'GitHub issue number', 'number')

Comment on issue node:

Resource: Issue

Operation: Create Comment

Issue Number: $fromAI('issue_number', ...)

Body: $fromAI('comment_body', 'Comment text', 'string')

List issues node:

Resource: Repository

Operation: Get Issues

Limit: 10

Get file node:

Resource: File

Operation: Get

File Path: $fromAI('file_path', 'File path in repository', 'string')

Step 3: configure authentication

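Create a GitHub credential in n8n from your personal access token and select it in each GitHub node. n8n applies the credential on the server side when a tool is invoked, so the token never has to appear in the workflow itself.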

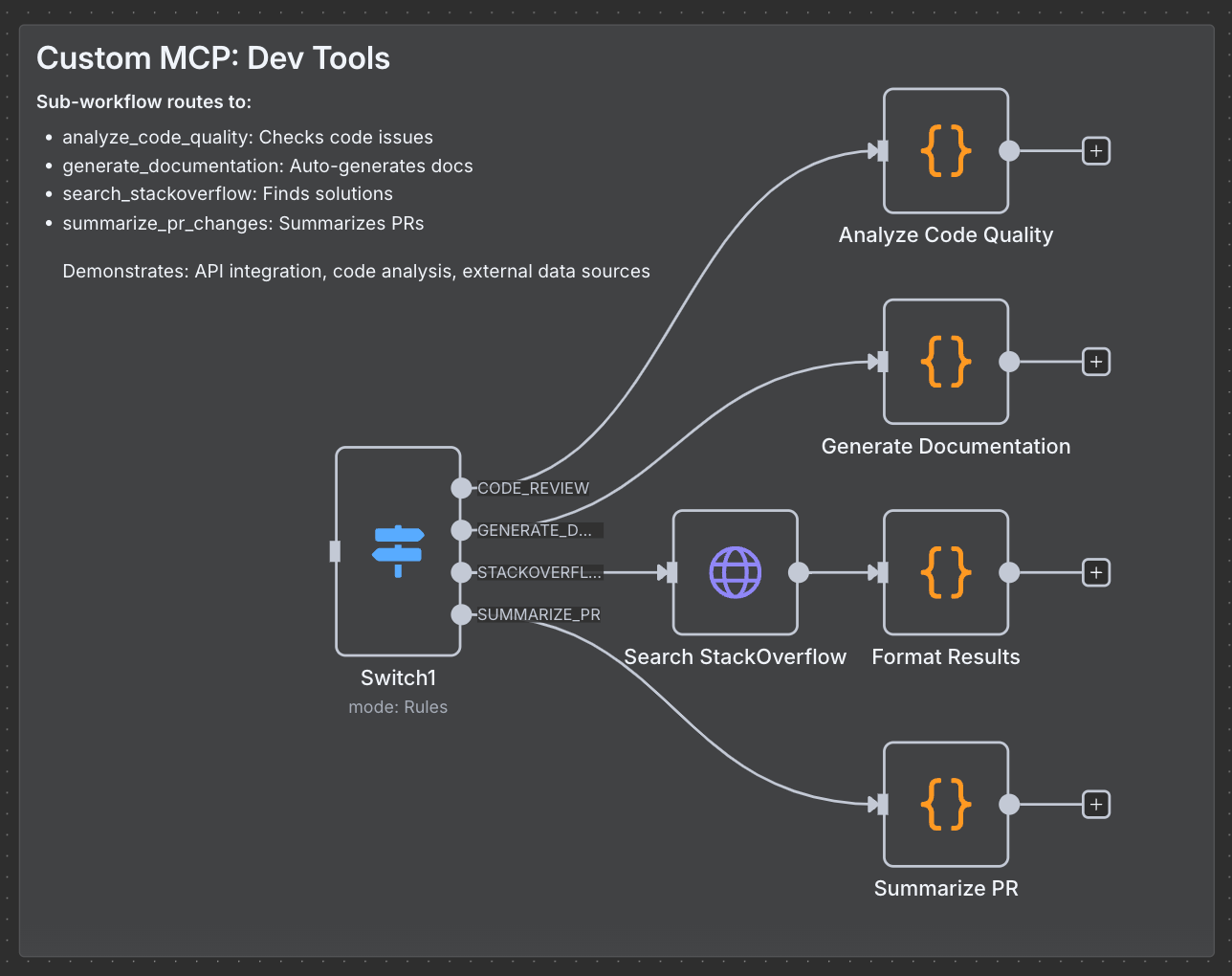

Building the custom dev tools MCP server

This is where MCP’s value really shines: creating specialized internal tools.

MCP Server Trigger (path: dev-tools)

Execute Workflow Trigger (receives function calls)

Switch Node (routes to appropriate tool)

Tool Workflow Nodes (expose tools to MCP)
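The Switch step in that chain boils down to a lookup from tool name to handler. Here is a minimal JavaScript sketch of that routing, assuming each call arrives as an object with a tool name and an args object. The tool names, field names, and stub handlers are all hypothetical and are not n8n's actual payload format:

```javascript
// Hypothetical tool registry: names and handlers are placeholders for illustration.
const toolHandlers = new Map([
  ["analyze_code", (args) => ({ tool: "analyze_code", received: args.code })],
  ["search_stackoverflow", (args) => ({ tool: "search_stackoverflow", received: args.query })],
  ["summarize_pr", (args) => ({ tool: "summarize_pr", received: args.pr_number })],
]);

// Route one incoming call to its handler, mirroring what the Switch node does visually.
function routeToolCall(call) {
  const handler = toolHandlers.get(call.tool);
  if (!handler) {
    // Return a structured error instead of throwing so the agent gets a readable result.
    return { error: `Unknown tool: ${call.tool}`, available: [...toolHandlers.keys()] };
  }
  return handler(call.args ?? {});
}
```

Returning the list of available tools on a miss gives the agent something to recover with, which is easier to debug than a bare failure.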

Tool 1: Code quality analyzer

What developers on Reddit actually want: something that catches console.logs, old var declarations, and nested if-hell before code review.

How it works in n8n:

Add a Code node

Check for common issues (debug statements, old syntax, missing comments)

Return a JSON score and suggestions

Skip the fancy static analysis. The 80/20 rule applies: catching the obvious issues catches 80% of problems. Your custom tool can focus on your team’s specific code smells.
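A rough sketch of what the Code node logic could look like, covering three of the checks mentioned above (debug statements, var declarations, deep nesting). The function name, the nesting threshold, and the 10-point penalty per issue are assumptions for illustration, not values from a real workflow:

```javascript
// Assumed threshold and weight; tune them to your team's standards.
const MAX_NESTING_DEPTH = 3;
const PENALTY_PER_ISSUE = 10;

function analyzeCode(source) {
  const issues = [];
  const suggestions = [];

  // Debug statements left behind.
  if (/\bconsole\.log\s*\(/.test(source)) {
    issues.push("debug statements");
    suggestions.push("Remove console.log");
  }

  // Pre-ES6 variable declarations.
  if (/\bvar\s+[A-Za-z_$]/.test(source)) {
    issues.push("old var declarations");
    suggestions.push("Use const/let");
  }

  // Rough nesting estimate: the deepest level of open braces.
  // Braces inside strings and comments are counted too, so this is only a heuristic.
  let depth = 0;
  let maxDepth = 0;
  for (const ch of source) {
    if (ch === "{") {
      depth += 1;
      maxDepth = Math.max(maxDepth, depth);
    } else if (ch === "}") {
      depth = Math.max(0, depth - 1);
    }
  }
  if (maxDepth > MAX_NESTING_DEPTH) {
    issues.push("deep nesting");
    suggestions.push("Reduce nesting depth");
  }

  const score = Math.max(0, 100 - PENALTY_PER_ISSUE * issues.length);
  return { score, issues, suggestions };
}
```

With these assumed weights, the sample function in the examples later in this post triggers all three checks and scores 70. In an n8n Code node you would typically wrap the result as an item, for example [{ json: analyzeCode(code) }], where how code reaches the node depends on how you wire the tool.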

Tool 2: StackOverflow search

Use an HTTP request node hitting StackOverflow’s API. Format the results. Done.

Always use {dynamicTyping: true, skipEmptyLines: true} when parsing external API responses. Trust me on this one.
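For the "format the results" part, here is a small sketch of the two pieces around the HTTP Request node: building the search URL and trimming the response. It assumes the public Stack Exchange API v2.3 search/advanced endpoint and its items array; the function names and the page size of 5 are my own choices for illustration:

```javascript
// Build a Stack Exchange API search URL.
// The page size of 5 is an arbitrary choice for this sketch.
function buildSearchUrl(query, pageSize = 5) {
  const params = new URLSearchParams({
    order: "desc",
    sort: "relevance",
    q: query,
    site: "stackoverflow",
    pagesize: String(pageSize),
  });
  return `https://api.stackexchange.com/2.3/search/advanced?${params}`;
}

// Reduce the raw response to the few fields an agent actually needs.
function formatResults(response) {
  const items = Array.isArray(response?.items) ? response.items : [];
  return items.map((item) => ({
    title: item.title,
    link: item.link,
    score: item.score,
    answered: Boolean(item.is_answered),
  }));
}
```

Trimming the payload before it goes back to the agent keeps the tool output short, which matters once several tools are chained in one conversation.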

Tool 3: PR summarizer

Takes GitHub PR data and returns a human-readable summary. Perfect for daily standups.

Keep summaries short. A good summary is 1-2 sentences max. The AI can always fetch more details if needed.
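A one-sentence summary can be produced without an LLM at all. This sketch assumes the input looks like the pull request object returned by GitHub's single pull request endpoint (number, title, user.login, changed_files, additions, deletions); the function name and the exact wording of the sentence are hypothetical:

```javascript
// Summarize a pull request in a single sentence.
// Missing fields fall back to neutral defaults instead of printing "undefined".
function summarizePr(pr) {
  const author = pr.user?.login ?? "unknown author";
  const files = pr.changed_files ?? 0;
  const fileWord = files === 1 ? "file" : "files";
  return (
    `PR #${pr.number} "${pr.title}" by ${author} ` +
    `changes ${files} ${fileWord} (+${pr.additions ?? 0}/-${pr.deletions ?? 0}).`
  );
}
```

If the agent needs more than this, it can still call the GitHub tools for the full diff.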

Connecting your AI agent

Now comes the fun part: letting your AI agent discover and use these tools automatically.

Add MCP Client nodes pointing to your server endpoints

Configure your AI agent (OpenAI GPT-4, Claude, whatever)

Add a Chat Trigger for the web interface

System prompt that actually works:

You are a developer assistant. You help with code reviews,
documentation, and development workflows. You have access
to GitHub and custom dev tools. Use them proactively.

Don’t over-engineer your system prompts. Be clear, be direct, be brief.

Two real examples

Analyze this code quality

function test() {
  var x = 1;
  console.log(x);
  if (x) {
    if (x > 0) {
      if (x < 10) {
        return x;
      }
    }
  }
}

Agent response:

"Score: 70/100. Issues: debug statements, old var declarations, deep nesting. Suggestions: Remove console.log, use const/let, reduce nesting depth."

The AI automatically chose the right tool, executed it, and formatted the response.

“Search StackOverflow for async/await best practices, then generate documentation for my async function”

Agent response:

“Found 5 relevant posts. Based on those, here’s your documentation...”

This required ZERO configuration beyond defining the tools. The AI agent figured out it needed two tools and orchestrated them automatically.

My tips

After digging through Reddit threads, n8n forums, and GitHub issues, here’s what experienced users actually do:

Start small, then scale

Maybe automate something simple, like notifying your team when a new client signs up. As you get comfortable, you can build more complex workflows.

Why this matters: Complex workflows are just simple workflows connected together. Master the basics first.

Use Sub-workflows for complex agents

From n8n forums: for complex AI agents with many tools, use the Call Workflow node. Each tool becomes a separate workflow.

Benefits:

Easier to debug (test tools individually)

Better organization (not one giant workflow)

No performance penalty on n8n Cloud

Simple agents with 2-3 tools? Just use built-in nodes. Don’t over-engineer.

Naming conventions save lives

The biggest complaint on Reddit is messy workflows that nobody can understand 3 months later.

What works:

Name every node descriptively

Use consistent patterns (Get-[Thing], Check-[Condition], Send-[Action])

Add notes for complex logic

Document weird workarounds immediately

Error handling

Production workflows WILL fail. APIs time out. Services go down. Users send garbage data.

Essential practices:

Add Error Trigger nodes to catch failures

Set up retry logic (3 attempts with exponential backoff)

Send alerts to Slack/email when things break

Log everything: you’ll need it for debugging

n8n’s error handling saved us hundreds of hours monthly. We get alerted immediately when something breaks, with full context.
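The retry item in that list (3 attempts with exponential backoff) can be sketched in plain JavaScript, for cases where the call lives inside a Code node. The function names and the 500 ms base delay are assumptions; adjust them to the API you are calling:

```javascript
// Delays between attempts: base, 2x base, 4x base, ...
// Three attempts need only two waits.
function backoffDelays(attempts, baseDelayMs) {
  const delays = [];
  for (let i = 0; i < attempts - 1; i += 1) {
    delays.push(baseDelayMs * 2 ** i);
  }
  return delays;
}

// Call an async function up to `attempts` times, waiting longer after each failure.
async function retryWithBackoff(fn, { attempts = 3, baseDelayMs = 500 } = {}) {
  const delays = backoffDelays(attempts, baseDelayMs);
  let lastError;
  for (let attempt = 0; attempt < attempts; attempt += 1) {
    try {
      return await fn(attempt);
    } catch (error) {
      lastError = error;
      // Wait only if another attempt is coming.
      if (attempt < delays.length) {
        await new Promise((resolve) => setTimeout(resolve, delays[attempt]));
      }
    }
  }
  // Every attempt failed: surface the last error so the error workflow can pick it up.
  throw lastError;
}
```

Rethrowing after the final attempt matters: swallowing the error here would hide the failure from whatever alerting you set up.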

Security

Do these things:

Store API keys in n8n’s credential manager (NEVER in code)

Use bearer token authentication for production MCP endpoints

Run behind a reverse proxy (nginx/Caddy)

Enable rate limiting

Set up environment variables properly

Most security breaches happen because someone hardcoded credentials “just for testing.” Don’t be that person.

Common issues

MCP client can’t connect

Check these:

Is the workflow activated?

Does the MCP path match your client config exactly?

Are SSE connections allowed through your firewall?

Is your SSL certificate valid?

When in doubt, check the execution logs. They’re there for a reason.
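The path and SSL checks are easy to script. A small sketch that compares the URL in your client config against the path set on the MCP Server Trigger, assuming the /mcp/<path>/sse URL shape shown earlier; the function name and the example host below are hypothetical:

```javascript
// Compare a client-side URL against the path configured on the MCP Server Trigger.
// Returns a list of problems; an empty list means nothing obvious is wrong.
function checkMcpEndpoint(clientUrl, triggerPath) {
  const problems = [];
  let parsed;
  try {
    parsed = new URL(clientUrl);
  } catch {
    return ["URL is not valid"];
  }
  if (parsed.protocol !== "https:") {
    problems.push("URL does not use https");
  }
  const expectedPath = `/mcp/${triggerPath}/sse`;
  if (parsed.pathname !== expectedPath) {
    problems.push(`Path is ${parsed.pathname}, expected ${expectedPath}`);
  }
  return problems;
}
```

Calling checkMcpEndpoint("http://n8n.example.com/mcp/github_tools/sse", "github-tools") reports both the missing https and the underscore typo in the path.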

Tools aren’t appearing in my AI agent

Fix these:

Write clear, descriptive tool descriptions (the AI uses these!)

Define $fromAI() parameters properly

Restart your AI agent after adding tools

Check that tool nodes are actually connected to the MCP trigger

90% of missing-tool issues come down to unclear descriptions. The AI needs to understand when to use each tool.

Everything is slow

Optimize:

Use caching for frequently accessed data

Set reasonable HTTP timeouts (don’t wait forever)

Monitor your n8n instance resources

Consider using async operations

If a tool takes >5 seconds, users will complain. If it takes >10 seconds, they’ll assume it’s broken.
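For the caching item, a minimal time-to-live cache is often enough. This is an in-memory sketch with hypothetical names; whether in-memory state survives between executions depends on how your n8n instance runs, so treat the storage choice as an assumption:

```javascript
// Minimal in-memory cache with a time-to-live. The clock is injectable for testing.
function createTtlCache(ttlMs, now = () => Date.now()) {
  const entries = new Map();
  return {
    get(key) {
      const entry = entries.get(key);
      if (!entry) return undefined;
      if (now() - entry.storedAt >= ttlMs) {
        entries.delete(key); // Expired: drop it so the caller refetches.
        return undefined;
      }
      return entry.value;
    },
    set(key, value) {
      entries.set(key, { value, storedAt: now() });
    },
  };
}
```

A typical use is to check the cache before a slow lookup and call set only after a successful response, so failed requests are never cached.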

Conclusion

You can keep hand-coding MCP servers if you want. Some problems need custom code. But for 80% of use cases? Visual development is faster, more maintainable, and easier to hand off. We now use a similar workflow at Moqa Studio.

Like and drop a comment below if you want the complete workflow JSON to get started. Way easier than building from scratch.

Also, if you found this useful, share it with a developer who’s still writing custom integrations by hand.

Now stop reading and go build something.